Amplify Feedback with Continuous Performance

By: Christian Melendez | May 14, 2018

As cloud becomes the norm, we’re letting others manage much of our infrastructure for us. Cloud providers offer common metrics like CPU, memory, storage, and networking so that you can stay up to date on the health of your system. And we worry less and less about those metrics with serverless architectures. But there remains a major concern that we can’t just trust to the cloud provider, and that’s our applications. We need our applications accompanied by a good set of internal metrics that will tell us about their health, and no one else can take care of that for us.

It doesn’t matter where our applications are hosted. If we don’t know how well things are running, we’ll continue to add features to the point that some users won’t be able to use them.

And performance issues usually aren’t evident until it’s too late. After the code has been released, problems start popping up. That’s why it’s important that we amplify feedback by assessing performance continuously. Shifting performance to the left will help you to think about performance early. It won’t just be an afterthought; rather, performance will be a feature of the application.

But what does all this mean? Let’s start by figuring out why we need to optimize application performance.

Why is performance important?

When you’re visiting a webpage, if it doesn’t load fast, you just leave. If an app is taking too long to work, you just close it. If applications are making users struggle to satisfy their needs, they’ll go somewhere else. We don’t usually think about performance until we need to.

We might not think that our application will be the next Facebook or Twitter—two applications that receive millions of visits per hour or minute. But it shouldn’t matter how many people use our applications. We should always be looking to have an application that performs well.

If we do this, we’ll realize the benefit of continuously adding features to the application because we won’t constantly have to fix performance issues in production. Some people claim they don’t have to worry about performance because “premature optimization is the root of all evil.” On the other side, there are the people who obsessively try to improve performance, thinking that performance is a feature. Which way of thinking is the correct one? It depends. There are always trade-offs.

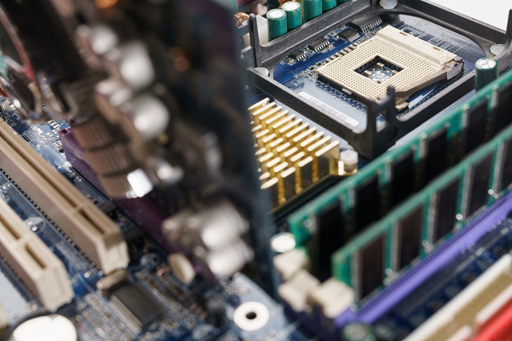

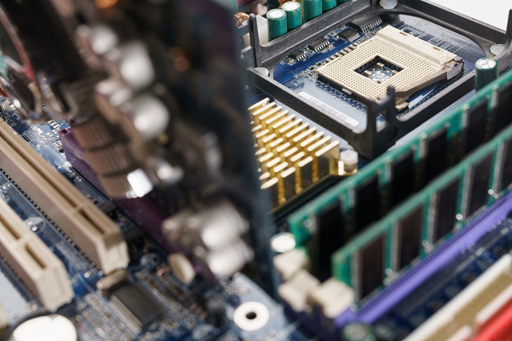

Performance is not a new focus. As an industry, we’ve always had to worry about it. Several years ago, we needed to make sure that our code could function with limited resources. Languages weren’t as mature as they are now, so memory problems such as stack overflows were common. Modern languages hide all of that complexity for us, but that comes at a cost.

As someone who’s been on both sides of the fence, I’ve found that premature optimization isn’t worth it—but that doesn’t mean that it isn’t important. It’s cheaper and easier to apply a fix if any problems are visible at the very beginning of the workflow.


Create a culture of continuous improvement

When we talk about culture, it all starts with management. If the people at the top don’t understand the impact of having applications with low performance, they’ll see it as a waste of time. To fully grasp the problems, they’ll need to see the numbers and have feedback. We’ll come back to that later—the importance of performance should be visible and supported by data.

Once management buys in, a performance-valuing culture needs to be spread everywhere. It’s not enough to say it’s important. You need to motivate developers by rewarding them when they do something to improve application performance. And I’m not even necessarily talking about a financial incentive. Sometimes a simple “Well done, thank you!” will give them recognition within the team and will make them feel that performance really matters. As Joe Duffy says in his performance culture post, “You get what you reward.”

In this regard, everyone should be clear about what success means. Do you need to optimize latency of an API to the lowest number possible? No. As long as that number is within the acceptable range, latency can still be considered successful. Performance needs to be constantly evaluated because if it isn’t, progress will be interrupted when the team needs to pay down some of the accumulated technical debt. This leads to the sometimes counterproductive idea that the team should throw everything out and start over with a greenfield project.

Only having a few people who truly care about performance isn’t a good idea. Folks think, “It’s not my problem. I’m not the performance-focused team member.” It can create a culture of blame. Performance should be everyone’s responsibility, and it should be a part of our day-to-day processes.

Start by shifting performance to the left

When I say you should shift performance to the left, I simply mean you need to think about performance from the very beginning of the development process. We usually think about delivering first. When it comes to performance, we buy into one of the biggest lies we in IT tell ourselves: that we’ll do it later. In a way, this makes sense. You won’t know if what you’re building will be successful when it’s live. But if it is successful, you’ll have to be quick about fixing performance issues, or else you’ll lose the opportunity to get more customers. It’s tricky, I know.

So, what if we deliver quickly, and we continuously work on doing everything we can to make our applications better?

First, you need to build the machinery to deploy live changes quickly. If you deliver in small batches, you’ll be able to iterate more often and fix performance issues within hours instead of days or weeks because it’s easier to identify where the problem is. There will be fewer interruptions for the team because everyone takes care of issues as they arise. Normally, developers get interrupted immediately when there are problems in production. I’ve been there, and it’s frustrating.

You should start with your continuous integration (CI) pipeline. Don’t assume that the things you’re changing won’t affect performance. Measure performance all the time. Start by profiling the application on your machine. A good CI practitioner will not push untested code to master. Load testing should become part of your CI pipeline. You also need to include load tests for each environment in your continuous delivery (CD) pipeline. By simply making your different types of tests (unit, integration, smoke, etc.) run in parallel, you’ll be stressing the application. If you don’t automate it, you might only think about performance again when a problem arises.
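
As a rough illustration of load testing inside a CI job, here is a minimal sketch. Everything in it is an assumption made for the example: `handle_request` is a hypothetical stand-in for the code under test, and the request count, concurrency, and 0.5 second p95 budget are placeholder numbers you would replace with your own.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from statistics import quantiles


def handle_request(payload: int) -> int:
    """Hypothetical stand-in for the code under test (e.g. an HTTP call)."""
    time.sleep(0.001)  # simulate a small amount of work
    return payload * 2


def timed_call(payload: int) -> float:
    """Run one request and return its latency in seconds."""
    start = time.perf_counter()
    handle_request(payload)
    return time.perf_counter() - start


def run_load_test(requests: int = 200, concurrency: int = 20) -> dict:
    """Fire `requests` calls across `concurrency` threads and summarize latency."""
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        latencies = list(pool.map(timed_call, range(requests)))
    # n=100 yields 99 cut points; index 94 is the 95th percentile.
    cuts = quantiles(latencies, n=100, method="inclusive")
    return {"count": len(latencies), "p95": cuts[94], "max": max(latencies)}


def check_budget(summary: dict, p95_budget_s: float) -> bool:
    """Return True when the measured p95 stays within the agreed budget."""
    return summary["p95"] <= p95_budget_s


def main() -> int:
    """Return 0 when the budget holds and 1 otherwise, for use as an exit code."""
    summary = run_load_test()
    return 0 if check_budget(summary, p95_budget_s=0.5) else 1
```

A pipeline step could run this script and call `sys.exit(main())`, so that a blown latency budget fails the build the same way a failing unit test would.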

Get feedback on each deployment

You don’t need to wait until users start noticing performance issues to do something about it. Here’s how feedback should work.

Feedback should be accessible to everyone

After the application has been updated, you need to have feedback. Developers need feedback, but they don’t usually have access to production servers. So it’s operations folks’ job to help them to get visibility. One way of achieving that is by having a centralized logging tool everyone can access. There, people will be able to spot not only performance issues, but problems in general.

Application performance monitoring is key because you need to get more information about what’s really going on. For example, you might share contextual error information that will help your team spot issues in a database.

Or what about alerts? Without those, it’s hard to be proactive about fixing problems. Of course, I’m not talking about having noisy alerts. Rather, you should set up alerts based on error rates, latency issues, networking issues, page abandon rates—things that will tell you if something’s wrong.
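
To make the idea of quiet, signal-based alerts concrete, here is a small sketch of how such a rule might be evaluated. The `WindowStats` shape and all thresholds are assumptions invented for this example, not the behavior of any particular monitoring tool.

```python
from dataclasses import dataclass


@dataclass
class WindowStats:
    """Aggregated numbers for one monitoring window (hypothetical shape)."""
    requests: int
    errors: int
    p95_latency_ms: float


def evaluate_alerts(stats: WindowStats,
                    max_error_rate: float = 0.02,
                    max_p95_ms: float = 800.0,
                    min_requests: int = 100) -> list[str]:
    """Return the alerts that should fire for this window."""
    # Skip tiny samples: one failure in three requests is noise, not a signal.
    if stats.requests < min_requests:
        return []
    alerts = []
    error_rate = stats.errors / stats.requests
    if error_rate > max_error_rate:
        alerts.append(f"error rate {error_rate:.1%} above {max_error_rate:.1%}")
    if stats.p95_latency_ms > max_p95_ms:
        alerts.append(
            f"p95 latency {stats.p95_latency_ms:.0f} ms above {max_p95_ms:.0f} ms"
        )
    return alerts
```

The `min_requests` guard is one simple way to keep alerts from getting noisy: a window with too little traffic never fires, no matter how bad its ratios look.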

…and it should be useful

When you have feedback not only after doing deployments to production but also in other similar environments, you reduce the chances of releasing features with poor performance. Collect everything you can get, like syslog events. There are tools like webpagetest.org that give you decent context on how a site performs. I’ve been a huge fan of that service, and just recently I learned that you can have those same tools in your own environment.

You can also get performance feedback by monitoring the applications from a different perspective: the business perspective. Sometimes problems aren’t evident when we look at the infrastructure or error logs, so we need to keep an eye on things like the number of orders made in the application. I once helped a team that had an automated process not just to validate deployments but also to collect application metrics that were important from a revenue perspective.
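
A business-level check like this can be very simple. The sketch below compares orders per minute before and after a deployment. The function name, the sample shape, and the 30% drop threshold are all assumptions chosen for illustration.

```python
def orders_dropped(baseline_per_min: list[float],
                   current_per_min: list[float],
                   max_drop: float = 0.3) -> bool:
    """Return True when average orders fell by more than `max_drop` (a fraction)."""
    baseline = sum(baseline_per_min) / len(baseline_per_min)
    current = sum(current_per_min) / len(current_per_min)
    if baseline == 0:
        return False  # nothing to compare against
    return (baseline - current) / baseline > max_drop
```

A check like this can catch a slow or broken checkout even when infrastructure metrics and error logs look healthy.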

Once you have good quality feedback, the solution to a problem becomes quite obvious. This allows you to act sooner rather than later. Let’s say that after analyzing your site, you notice that you need a content delivery network (CDN) to reduce page load time. Or if the database is now a bottleneck that needs more and more time to give data back, you might add a cache layer to reduce the load on the database.
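
For the cache layer case, here is a minimal cache-aside sketch with a time-to-live. It is an in-memory illustration only: `TTLCache` and its `loader` callback are hypothetical names, and a real setup would more likely use a shared cache service.

```python
import time
from typing import Callable, Dict, Tuple


class TTLCache:
    """Tiny in-memory cache-aside helper; entries expire after `ttl_s` seconds."""

    def __init__(self, ttl_s: float, clock: Callable[[], float] = time.monotonic):
        self._ttl_s = ttl_s
        self._clock = clock  # injectable so expiry can be tested without sleeping
        self._store: Dict[str, Tuple[float, object]] = {}

    def get_or_load(self, key: str, loader: Callable[[str], object]) -> object:
        """Return the cached value, calling `loader` only on a miss or stale entry."""
        entry = self._store.get(key)
        now = self._clock()
        if entry is not None and now - entry[0] < self._ttl_s:
            return entry[1]  # cache hit: the database is not touched
        value = loader(key)  # cache miss or stale entry: go to the source
        self._store[key] = (now, value)
        return value
```

The trade-off is staleness: a longer `ttl_s` takes more load off the database but lets readers see older data for longer.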

Without having intentional feedback on each deployment, users will complain. And they don’t always complain right after you released something, when it’s convenient for you to fix it. They might complain at a moment when fixing performance problems is not only difficult, but also costly.

Learn and experiment because things will still go wrong

After you’ve been deploying constantly in small batches, including a certain type of performance testing in your pipeline, and getting feedback constantly so that it’s easier and cheaper to fix things, you might think that you’re covered. But things will still go wrong. It’s better if you’re prepared.

No pre-production environment can beat the experience of real users. You might practice having a similar traffic load in previous environments, but you can’t predict tomorrow’s users. Even if you design and code the application in a way that can support 10 times the number of users you typically have, things will always fail. Expect failure and embrace it.

Testing in production should be part of the process, not a phase that should be avoided and feared. It’s okay to wait for things to go live to really see how the application performs when receiving real usage. It will be hard and costly to have a copy of production where you can test changes. Quite frankly, you have other things to worry about. For example, you’ll be preparing the application to be resilient to failures. If the database is down, you should depend on the cache, a local copy, or default data. When something goes wrong, you learn. It’s okay to make mistakes; what’s not okay is failing to learn from them.
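
The database fallback described above might look something like this sketch. The `query_db` callable, the dictionary used as a cache, and `DEFAULT_PRODUCT` are hypothetical, and treating `ConnectionError` as the "database is down" signal is an assumption that depends on your driver.

```python
from typing import Callable, Dict

DEFAULT_PRODUCT = {"name": "unavailable", "price": None}  # hypothetical default data


def read_with_fallback(key: str,
                       query_db: Callable[[str], dict],
                       cache: Dict[str, dict],
                       default: dict = DEFAULT_PRODUCT) -> dict:
    """Try the database first, then the last cached value, then default data."""
    try:
        value = query_db(key)
    except ConnectionError:
        # Database is down: serve the last known good value if we have one.
        return cache.get(key, default)
    cache[key] = value  # remember the fresh value for the next outage
    return value
```

Users may see slightly old or generic data during an outage, which is usually better than an error page.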

You’re not always going to be prepared to react when something goes wrong, and it might not be your fault. That’s why it’s important to be able to deploy quickly, and get feedback constantly. Implementing things like a circuit-breaker with Hystrix, doing canary releases, or using feature flags in the application will help you to be more resilient. As a bonus, only a fraction of your users will notice that something’s happening. Rollbacks should be quick, easy, and expected.
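
To show the circuit-breaker idea in miniature, here is a sketch in Python. It is not Hystrix and does not mirror its API; the class, its parameters, and the default thresholds are assumptions made for the example.

```python
import time
from typing import Callable


class CircuitOpenError(RuntimeError):
    """Raised when the breaker is open and the call is skipped."""


class CircuitBreaker:
    """Stop calling a failing dependency until a cool-down has passed."""

    def __init__(self, max_failures: int = 3, reset_after_s: float = 30.0,
                 clock: Callable[[], float] = time.monotonic):
        self._max_failures = max_failures
        self._reset_after_s = reset_after_s
        self._clock = clock
        self._failures = 0
        self._opened_at = None  # None means the circuit is closed

    def call(self, func: Callable[[], object]) -> object:
        if self._opened_at is not None:
            if self._clock() - self._opened_at < self._reset_after_s:
                raise CircuitOpenError("circuit open, call skipped")
            # Cool-down elapsed: let one trial call through (half-open).
        try:
            result = func()
        except Exception:
            self._failures += 1
            if self._opened_at is not None or self._failures >= self._max_failures:
                self._opened_at = self._clock()  # open (or re-open) the circuit
            raise
        self._failures = 0
        self._opened_at = None  # success closes the circuit again
        return result
```

While the circuit is open, callers fail fast and can fall back to cached or default data instead of piling more requests onto a struggling dependency.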

Everyone, not just operations folks, should know what a healthy system looks like. And more importantly, everyone should know what to do when it’s not healthy.

Performance is not static: it continuously changes

In order to have applications with good performance, you need to constantly work on your awareness, culture, and a good set of practices.

Start by setting up the basics

To amplify feedback with continuous performance, it’s necessary to know why performance is important. That will help everyone to understand why you need to load test, even if the belief is that the recent change won’t have any significant impact. Then, you need to make performance everyone’s job, not just something for a few people to handle. Spread the news that performance should not be an afterthought or only something you think about when doing big refactors.

When you have the basics down, you’re ready to include performance feedback from the very beginning of the process: things like developers profiling locally to spot bugs. You can start by reserving a time for doing load tests, but eventually, as the set of test cases grows, it could be a matter of running all of them in parallel.
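
Local profiling doesn’t need special tooling to get started. As one possible approach, this sketch uses Python’s built-in `cProfile` and `pstats` modules. `slow_sum` is a hypothetical hot spot, and `profile_call` is a helper name invented for the example.

```python
import cProfile
import io
import pstats


def slow_sum(n: int) -> int:
    """Hypothetical hot spot: deliberately naive so it shows up in the profile."""
    total = 0
    for i in range(n):
        total += i * i
    return total


def profile_call(func, *args) -> str:
    """Run func(*args) under cProfile and return the top entries as text."""
    profiler = cProfile.Profile()
    profiler.enable()
    func(*args)
    profiler.disable()
    buffer = io.StringIO()
    stats = pstats.Stats(profiler, stream=buffer)
    stats.sort_stats("cumulative").print_stats(5)  # five most expensive entries
    return buffer.getvalue()
```

Printing the result of `profile_call(slow_sum, 1_000_000)` before pushing a change is a cheap way to see where the time goes.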

…and then keep monitoring

Having feedback before releasing to production is important because you can fix things quickly and effortlessly. But feedback from production will tell you the real story, all the time. For that reason, everyone needs to have that visibility. Knowing where things are wrong instead of just knowing that things are wrong will make the difference in how much time you’ll need to fix problems. This is especially true for performance problems—those that the user might only understand as “the site is slow.”

Expecting failure will foster resiliency in the application code. Don’t get discouraged when things are still going wrong. Use those failures to learn and constantly experiment with how the application will react under duress. That will give you the feedback you need to be prepared for unintentional failures.

Performance is not something that you should take care of only when it’s needed, but it also shouldn’t be the only thing you care about. There should be a balance. But what you can’t have enough of is feedback. Work to get it, and get it continuously.

